#By accessing the list, we can get the information of each hand's corresponding flag bit for handlandmark in results. multi_hand_landmarks : #list of all hands detected.

process ( imgRGB ) #completes the image processing. COLOR_BGR2RGB ) #Convert to rgb #Collection of gesture information results = hands.

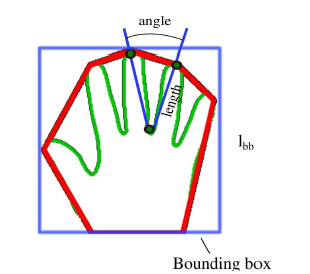

read () #If camera works capture an image imgRGB = cv2. GetVolumeRange () while True : success, img = cap. _iid_, CLSCTX_ALL, None ) volume = cast ( interface, POINTER ( IAudioEndpointVolume )) volbar = 400 volper = 0 volMin, volMax = volume. drawing_utils #To access speaker through the library pycaw devices = AudioUtilities. Hands () #complete the initialization configuration of hands mpDraw = mp. hands #detects hand/finger hands = mpHands. VideoCapture ( 0 ) #Checks for camera mpHands = mp. Import cv2 import mediapipe as mp from math import hypot from ctypes import cast, POINTER from comtypes import CLSCTX_ALL from pycaw.pycaw import AudioUtilities, IAudioEndpointVolume import numpy as np import cv2 import mediapipe as mp from math import hypot from ctypes import cast, POINTER from comtypes import CLSCTX_ALL from pycaw.pycaw import AudioUtilities, IAudioEndpointVolume import numpy as np cap = cv2. We can use it to extract the coordinates of the key points of the hand. It uses machine learning (ML) to infer 21 key 3D hand information from just one frame. MediaPipe Hands is a high-fidelity hand and finger tracking solution.

Mediapipe is an open-source machine learning library of Google, which has some solutions for face recognition and gesture recognition, and provides encapsulation of python, js and other languages. NumPy is a library for the Python programming language, adding support for large, multi-dimensional arrays and matrices, along with a large collection of high-level mathematical functions to operate on these arrays. The objective of this project is to develop an interface which will capture human hand gesture dynamically and will control the volume level.

Thecomputer then makes use of this data as input to handle applications. In this project for gesture recognition, the human body’s motions are read by computer camera. This helps to build a more potent link between humans and machines, rather than just the basic text user interfaces or graphical user interfaces (GUIs). Gesture recognition helps computers to understand human body language.
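OpenCV's `cap.read()` returns frames in BGR channel order, while MediaPipe expects RGB input, which is why each frame is converted with `cv2.cvtColor(img, cv2.COLOR_BGR2RGB)` before `hands.process` is called. Because a frame is just a NumPy array of shape height × width × 3, the same conversion can be sketched in plain NumPy by reversing the channel axis. The helper `bgr_to_rgb` and the tiny synthetic frame are hypothetical and only illustrate what the conversion does; the project itself uses OpenCV.

```python
import numpy as np


def bgr_to_rgb(img: np.ndarray) -> np.ndarray:
    """Reverse the channel axis of an H x W x 3 image (BGR -> RGB).

    For 3-channel images this yields the same pixel values as
    cv2.cvtColor(img, cv2.COLOR_BGR2RGB). np.ascontiguousarray copies the
    reversed view into a C-contiguous array, which some libraries require.
    """
    return np.ascontiguousarray(img[..., ::-1])


# A synthetic 2 x 2 frame in OpenCV's BGR channel order (values are arbitrary).
bgr = np.array([[[255, 0, 0], [0, 255, 0]],
                [[0, 0, 255], [10, 20, 30]]], dtype=np.uint8)
rgb = bgr_to_rgb(bgr)
print(rgb[0, 0], rgb[1, 1])
```

In the project, OpenCV's built-in conversion is the more explicit choice; the NumPy version is shown only to make the channel swap visible.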
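MediaPipe reports each hand landmark with `x` and `y` normalized to the range 0 to 1 relative to the frame, so extracting usable key-point coordinates means scaling them by the image width and height. Once they are in pixels, the gap between two fingertips can be measured with `hypot`, which the project imports. The sketch below assumes the pair of interest is the thumb tip (landmark 4) and the index fingertip (landmark 8) in MediaPipe's hand-landmark numbering; the names `to_pixel_coords`, `finger_gap` and `Point` are hypothetical, and plain `(x, y)` tuples stand in for MediaPipe's landmark objects so it runs without a camera.

```python
from math import hypot
from typing import List, Sequence, Tuple

# Hypothetical type: an integer (x, y) pixel position.
Point = Tuple[int, int]

THUMB_TIP = 4         # MediaPipe hand-landmark index of the thumb tip
INDEX_FINGER_TIP = 8  # MediaPipe hand-landmark index of the index fingertip


def to_pixel_coords(landmarks: Sequence[Tuple[float, float]],
                    width: int, height: int) -> List[Point]:
    """Scale normalized (x, y) landmarks to integer pixel coordinates."""
    return [(int(x * width), int(y * height)) for x, y in landmarks]


def finger_gap(pixels: Sequence[Point]) -> float:
    """Euclidean distance in pixels between the thumb tip and the index fingertip."""
    (x1, y1), (x2, y2) = pixels[THUMB_TIP], pixels[INDEX_FINGER_TIP]
    return hypot(x2 - x1, y2 - y1)


if __name__ == "__main__":
    # 21 fake landmarks for a 640 x 480 frame; only indices 4 and 8 matter here.
    fake = [(0.5, 0.5)] * 21
    fake[THUMB_TIP] = (0.25, 0.5)
    fake[INDEX_FINGER_TIP] = (0.390625, 0.25)
    pixels = to_pixel_coords(fake, 640, 480)
    print(pixels[THUMB_TIP], pixels[INDEX_FINGER_TIP], finger_gap(pixels))
```

Inside the detection loop, the tuples could be built as `[(lm.x, lm.y) for lm in handlandmark.landmark]`, with the height and width taken from `img.shape`.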
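The setup code defines `volMin`, `volMax`, `volbar = 400` and `volper = 0`, which appear intended for mapping the finger gap onto three ranges: the speaker's dB range, an on-screen volume bar, and a percentage. A minimal sketch of that mapping with `np.interp`, assuming a hypothetical usable hand range of 50 to 220 px and a bar running from y = 400 (empty) to y = 150 (full). The dB limits -65.25 to 0.0 are only example values; the real ones come from `volume.GetVolumeRange()` on the user's machine. On Windows, the resulting dB level would then be applied with pycaw via `volume.SetMasterVolumeLevel(vol, None)`, which is left out here so the sketch runs anywhere. The function name `map_gap` is hypothetical.

```python
import numpy as np

# Assumed values for illustration only: the real dB limits come from
# volume.GetVolumeRange(), and the hand range depends on camera distance.
HAND_RANGE = [50, 220]          # hypothetical min/max thumb-index gap in pixels
VOL_MIN, VOL_MAX = -65.25, 0.0  # example dB range of a speaker endpoint
BAR_RANGE = [400, 150]          # hypothetical bar y-coordinates: 400 = empty, 150 = full


def map_gap(length: float):
    """Map a fingertip gap in pixels to (dB level, bar y-coordinate, percent).

    np.interp clamps inputs outside HAND_RANGE to the end values, so a very
    small or very large gap cannot push the volume outside its range.
    """
    vol = float(np.interp(length, HAND_RANGE, [VOL_MIN, VOL_MAX]))
    volbar = float(np.interp(length, HAND_RANGE, BAR_RANGE))
    volper = float(np.interp(length, HAND_RANGE, [0, 100]))
    return vol, volbar, volper


if __name__ == "__main__":
    for gap in (10, 50, 135, 220, 300):
        print(gap, map_gap(gap))
```

A linear mapping in dB is the simplest choice; because loudness is perceived logarithmically, the percentage shown on screen will not track perceived volume exactly.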
